Overview

Chainbase Cloud-Native Kafka is designed to be robust and high-throughput, handling large volumes of data efficiently. Its core attributes, high throughput and low latency, make it a strong choice for applications that need fast, consistent data delivery. Its reliability and replayability ensure that data is not lost along the way and can be re-read later. Whether you are running a high-frequency trading platform in DeFi or tracking user activity in a large-scale dApp, these capabilities offer a distinct advantage in the fast-moving world of Web3.

Scenarios

- High-frequency Trade Analysis on DEXs: Cloud-Native Kafka handles large volumes of real-time trade data, giving exchanges instant trade analytics that help traders make better-informed decisions. Its high throughput ensures that every trade is accurately captured, even at peak trading times.

- Liquidity Monitoring in DeFi: In DeFi, liquidity can change quickly. Cloud-Native Kafka tracks these changes in real time, giving users up-to-date liquidity information so they can make timely investment decisions.

- Real-time Tracking in NFT Markets: In NFT markets, following market events in real time is crucial. Cloud-Native Kafka captures every purchase, sale, and auction event, giving market participants the latest market trends.

Benefits

Here are the core benefits Chainbase Cloud-Native Kafka offers:

- High Throughput: Tailored for large-scale Web3 data updates, Cloud-Native Kafka transmits data efficiently and at high speed.

- Reliability: With redundant data storage across a distributed system, Chainbase Cloud-Native Kafka preserves your data, keeps it accessible, and keeps the service reliable 24/7.

- Low Latency: Kafka’s low latency delivers data in near real time. Whether for real-time analytics or other time-sensitive applications, Kafka keeps the delay from data generation to consumption minimal.

- Replayability: Go back and re-read past data streams from an earlier point, enabling a comprehensive review and helping ensure Web3 data integrity and accuracy over time.
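In Kafka terms, replaying means moving a consumer back to an earlier offset. The sketch below illustrates this, assuming the third-party confluent-kafka Python client and that the service allows manual partition assignment (neither is stated on this page). How far back you can go depends on topic retention, which is also not specified here. `replay_start_ms` and `seek_to_timestamp` are hypothetical helper names:

```python
import time
from typing import List, Optional


def replay_start_ms(hours_back: float, now_ms: Optional[int] = None) -> int:
    """Unix timestamp in milliseconds, `hours_back` hours before `now_ms`."""
    if hours_back < 0:
        raise ValueError("hours_back must be non-negative")
    if now_ms is None:
        now_ms = int(time.time() * 1000)  # current wall-clock time in ms
    return now_ms - int(hours_back * 3600 * 1000)


def seek_to_timestamp(consumer, topic: str, partitions: List[int], ts_ms: int) -> None:
    """Assign `consumer` to the given partitions of `topic`, starting at the
    earliest offset whose timestamp is >= ts_ms.

    `consumer` is a confluent_kafka.Consumer; the package is imported lazily
    so this module can be loaded without it.
    """
    from confluent_kafka import TopicPartition

    # On input, the offset field of each TopicPartition carries the timestamp.
    wanted = [TopicPartition(topic, p, ts_ms) for p in partitions]
    # The broker resolves each timestamp to an offset (-1 if ts_ms is past the last record).
    resolved = consumer.offsets_for_times(wanted, timeout=10.0)
    consumer.assign(resolved)  # start consuming from the resolved offsets
```

For example, `seek_to_timestamp(consumer, "ethereum_blocks", [0], replay_start_ms(6))` would rewind partition 0 by about six hours. Note that manual `assign()` bypasses consumer-group rebalancing for those partitions.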

Quick Start

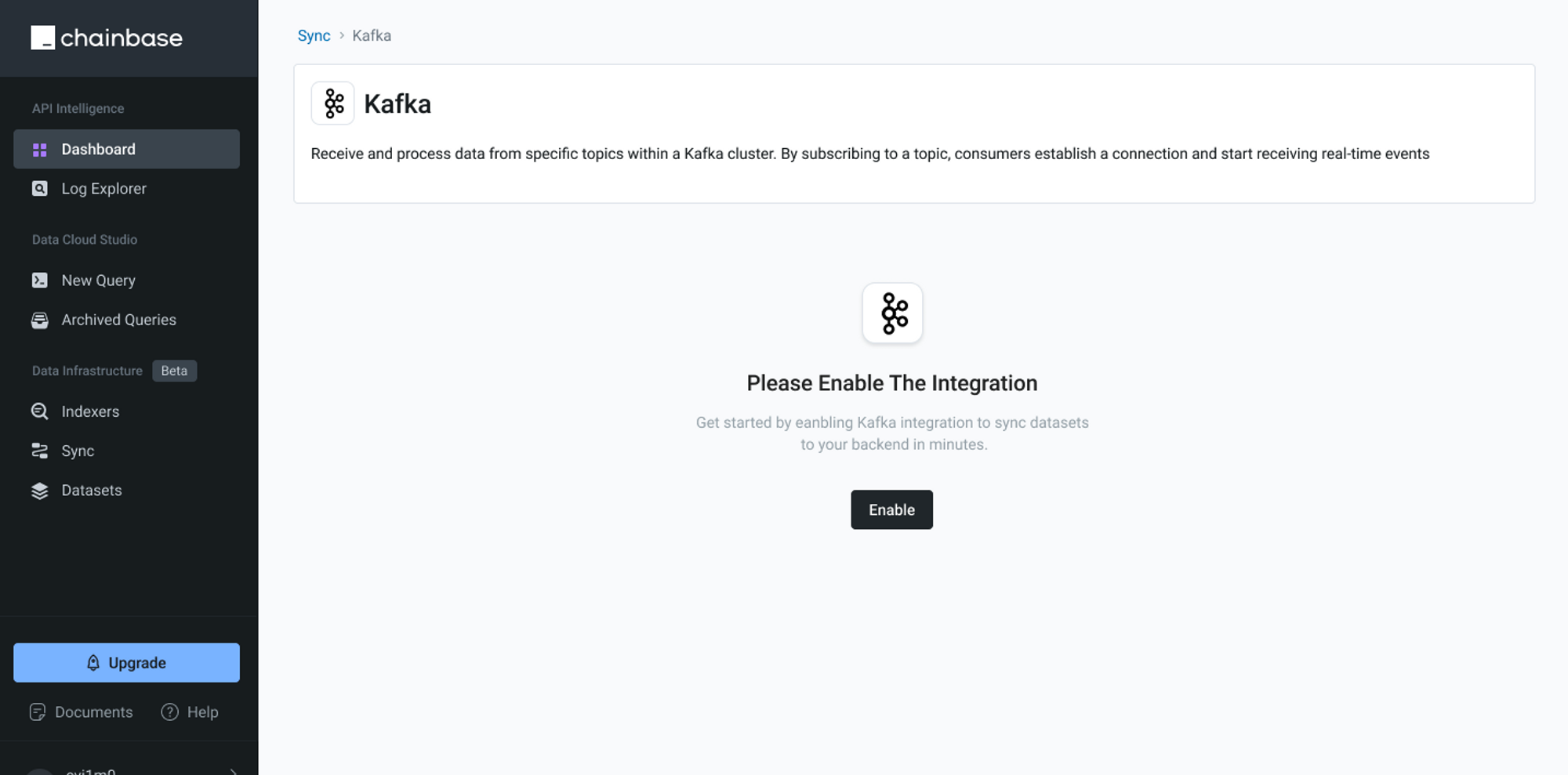

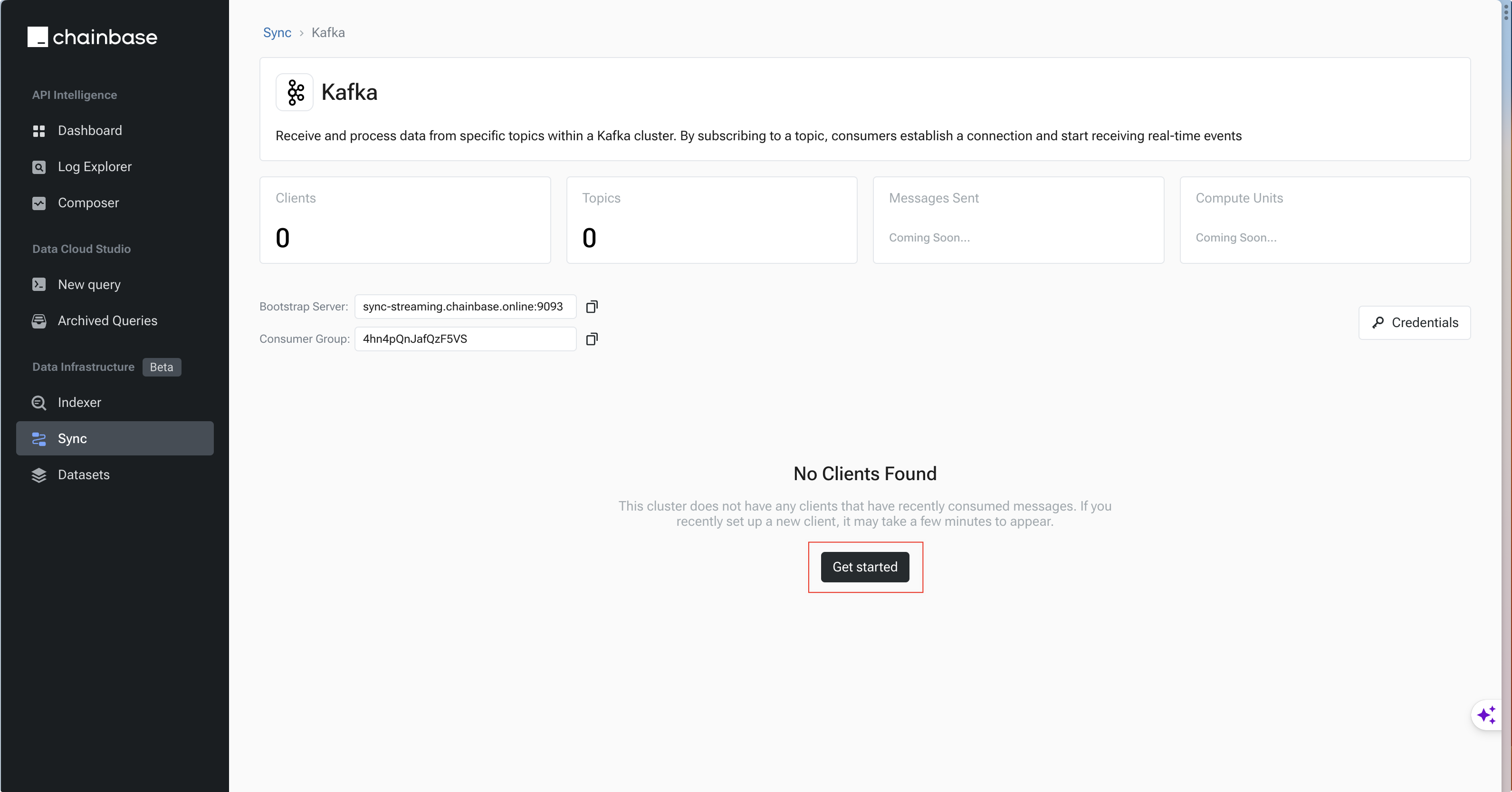

1. Enable Kafka Integration

When you open the Sync - Kafka integration, you will see the integration-enable panel shown below. Click the enable button to turn on the integration; the system then automatically initializes a consumer group bound to your account.

Enable Kafka Integration

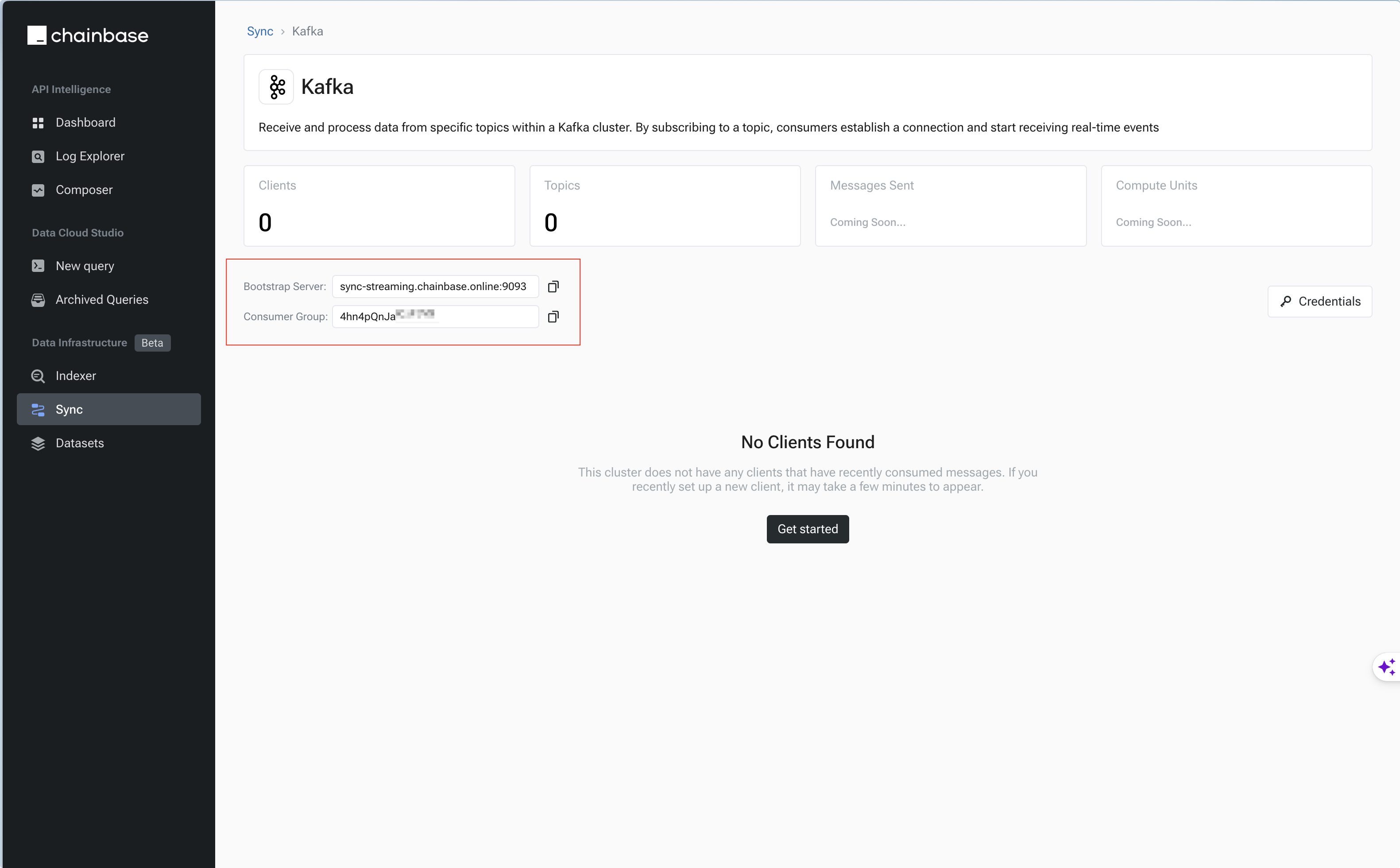

Bootstrap Server & Consumer Group

The Bootstrap Servers and Consumer Group connection information is shown at the top left.
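These two values are what a Kafka client needs to locate the cluster and join your consumer group. The following is a minimal sketch of the connection part of a consumer config, assuming a librdkafka-based client such as confluent-kafka, which uses the dotted keys below. `base_consumer_config` is a hypothetical helper, and the values in the example are placeholders, not real Chainbase endpoints:

```python
from typing import Dict


def base_consumer_config(bootstrap_servers: str, group_id: str) -> Dict[str, str]:
    """Connection settings copied from the console (hypothetical helper)."""
    servers = bootstrap_servers.strip()
    group = group_id.strip()
    if not servers:
        raise ValueError("bootstrap_servers is empty")
    if not group:
        raise ValueError("group_id is empty")
    return {
        # Comma-separated host:port list shown as Bootstrap Servers.
        "bootstrap.servers": servers,
        # The consumer group the console initialized for your account.
        "group.id": group,
        # Where to start when the group has no committed offset yet.
        "auto.offset.reset": "earliest",
    }
```

Set `auto.offset.reset` to `"latest"` instead if a new group should only see records that arrive after it connects.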

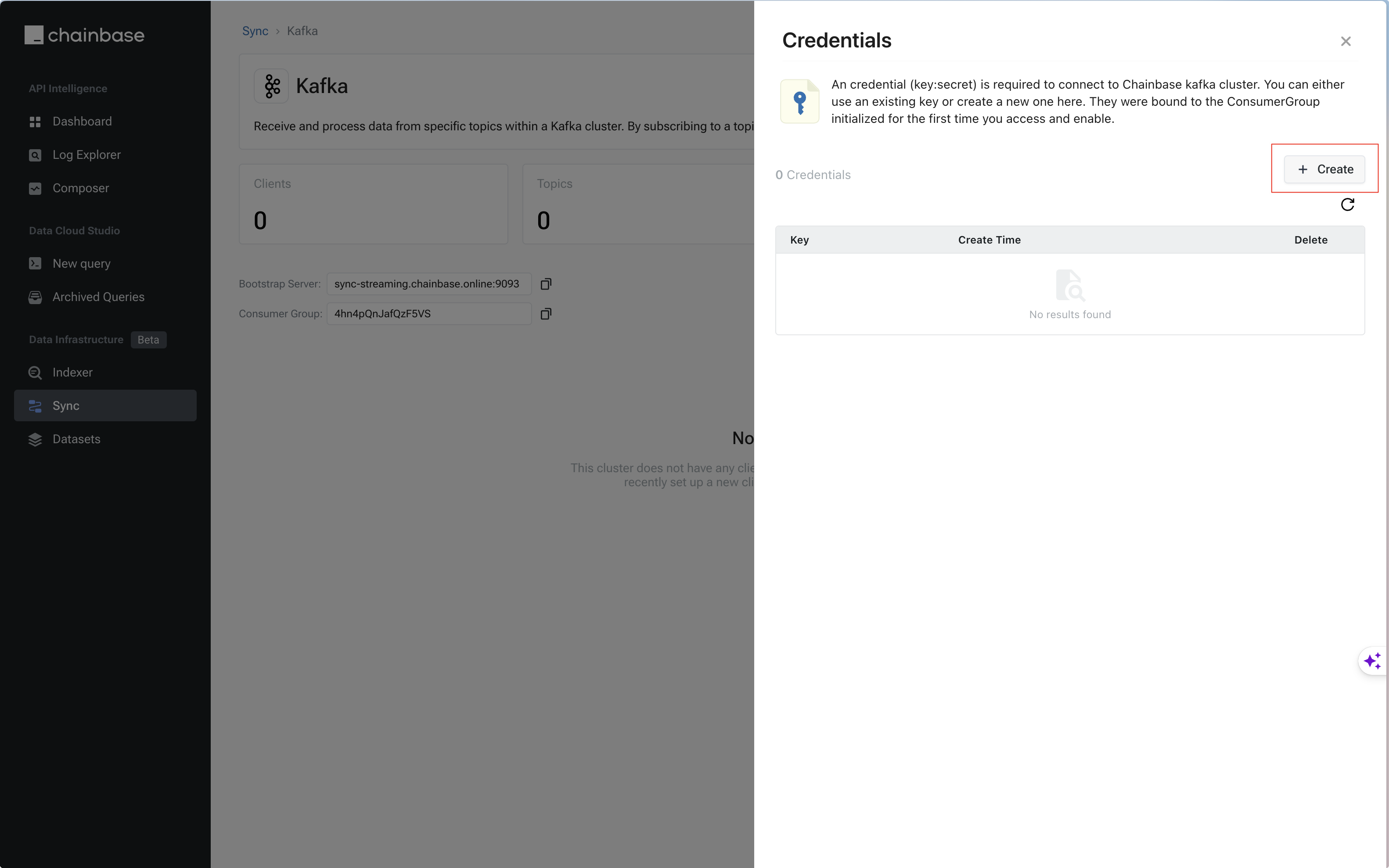

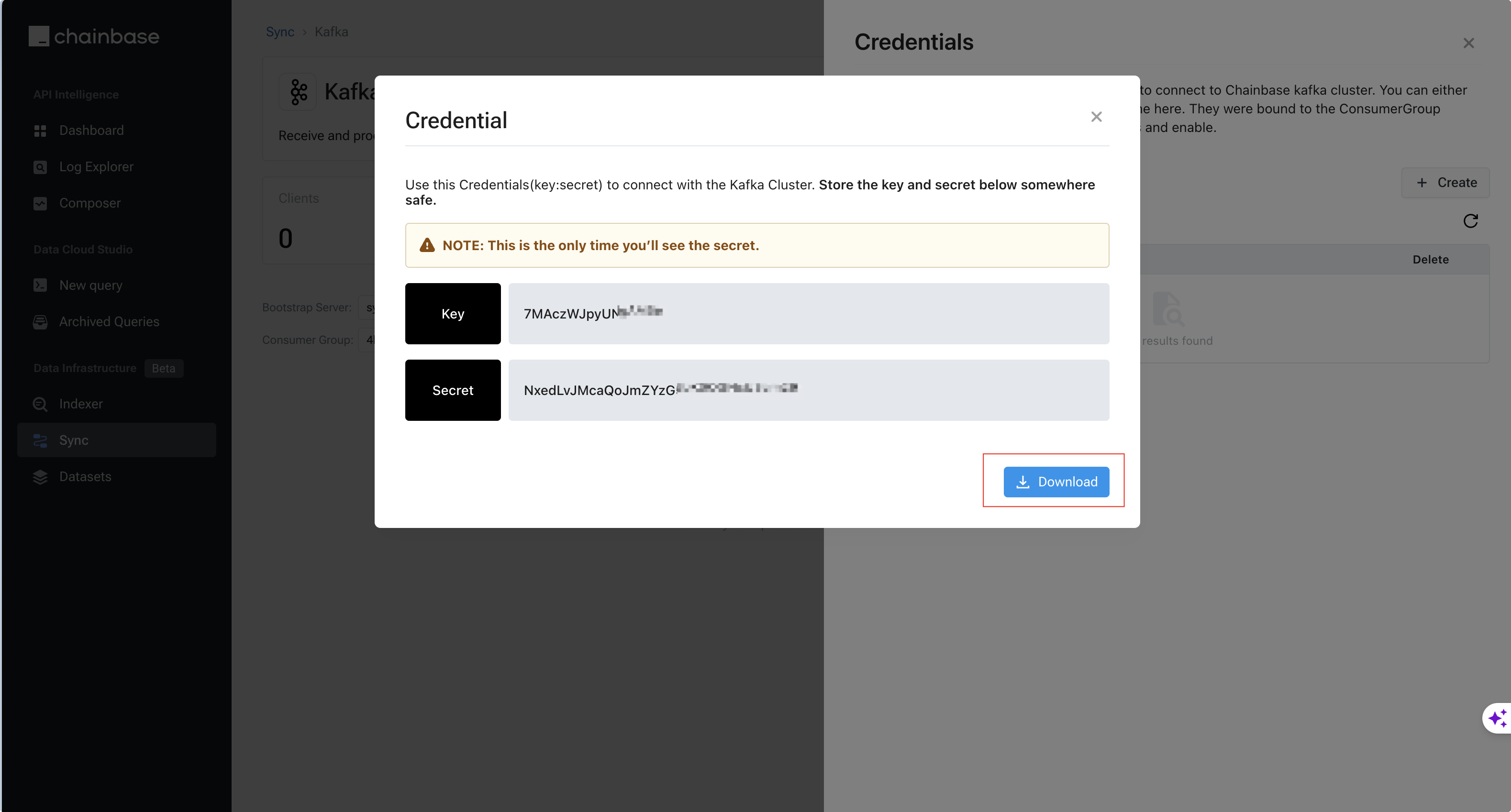

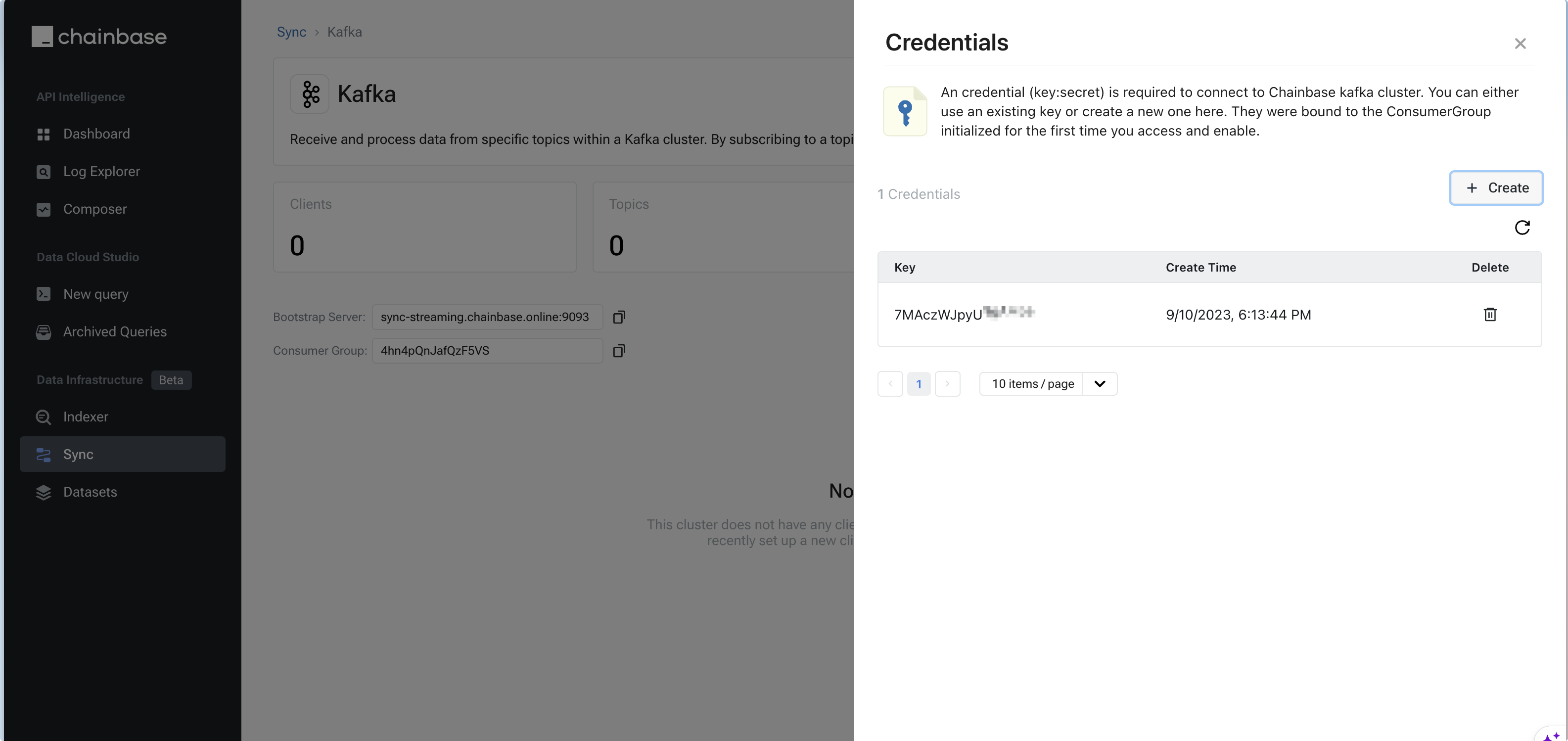

2. Create Kafka Credentials (Key:Secret)

Next, you must create credentials before you can connect to Cloud-Native Kafka. Click Credentials, then click the create button; the credentials are initialized and shown in a popup.
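Because the secret grants access to your data, it is safer to load it from the environment than to hard-code it. The sketch below is a minimal illustration that assumes the Key:Secret pair is used as the SASL username and password over SASL_SSL with the PLAIN mechanism. This page does not confirm that, so follow the console's own connection example where it differs. The environment variable names `CHAINBASE_KAFKA_KEY` and `CHAINBASE_KAFKA_SECRET` are hypothetical:

```python
import os
from typing import Dict, Mapping, Optional


def credential_config(env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """SASL settings built from the Key:Secret pair (assumed mapping)."""
    env = os.environ if env is None else env
    key = env.get("CHAINBASE_KAFKA_KEY")        # hypothetical variable name
    secret = env.get("CHAINBASE_KAFKA_SECRET")  # hypothetical variable name
    if not key or not secret:
        raise RuntimeError("set CHAINBASE_KAFKA_KEY and CHAINBASE_KAFKA_SECRET")
    return {
        "security.protocol": "SASL_SSL",  # assumption: TLS-encrypted SASL
        "sasl.mechanisms": "PLAIN",       # assumption: PLAIN username/password
        "sasl.username": key,
        "sasl.password": secret,
    }
```

Merging this dictionary with the bootstrap and group settings gives a complete consumer config.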

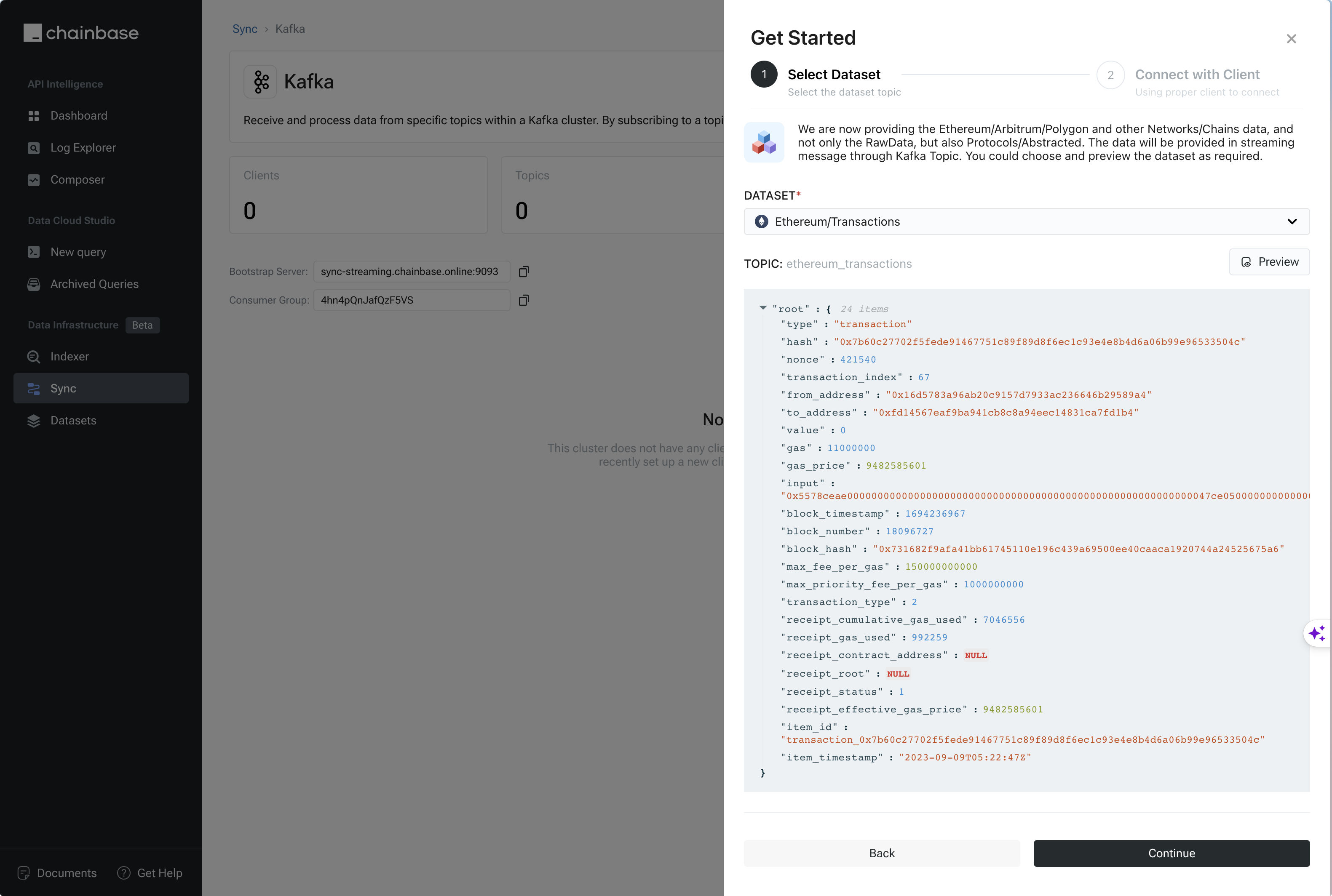

3. Choose the dataset to preview the format

Preview dataset format
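The preview shows the exact record layout for each dataset. The following is a minimal sketch for turning a raw message value into a Python dict. It assumes, without confirmation from this page, that records are UTF-8 encoded JSON objects. No field names are assumed, and `decode_record` is a hypothetical helper:

```python
import json
from typing import Any, Dict, Optional


def decode_record(value: Optional[bytes]) -> Optional[Dict[str, Any]]:
    """Decode one message value, assuming it is a UTF-8 JSON object.

    Returns None for empty values; malformed JSON raises
    json.JSONDecodeError, which is a subclass of ValueError.
    """
    if value is None or len(value) == 0:
        return None
    record = json.loads(value.decode("utf-8"))
    if not isinstance(record, dict):
        raise ValueError(f"expected a JSON object, got {type(record).__name__}")
    return record
```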

Supported Topics

When consuming data with Kafka, use the topics listed in the table below as the Kafka topic.

| Network | Kafka Topics |
|---|---|
| ethereum | ethereum_blocks, ethereum_contracts, ethereum_erc20_transfer, ethereum_transactions, ethereum_logs, ethereum_traces |
| optimism | optimism_blocks, optimism_contracts, optimism_erc20_transfer, optimism_transactions, optimism_logs, optimism_traces |
| bsc | bsc_blocks, bsc_contracts, bsc_erc20_transfer, bsc_transactions, bsc_logs, bsc_traces |
| polygon | polygon_blocks, polygon_contracts, polygon_erc20_transfer, polygon_transactions, polygon_logs, polygon_traces |
| avalanche | avalanche_blocks, avalanche_contracts, avalanche_erc20_transfer, avalanche_transactions, avalanche_logs, avalanche_traces |
| arbitrum | arbitrum_blocks, arbitrum_contracts, arbitrum_erc20_transfer, arbitrum_transactions, arbitrum_logs, arbitrum_traces |
| base | base_blocks, base_contracts, base_erc20_transfer, base_transactions, base_logs, base_traces |
| zksync | zksync_blocks, zksync_contracts, zksync_erc20_transfer, zksync_transactions, zksync_logs, zksync_traces |
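Every topic in the table follows the pattern `<network>_<dataset>`. The small helper below builds topic names from that pattern and rejects combinations the table does not list, which catches typos before a client subscribes. `topic_name` and `topics_for` are illustrative names, not part of any Chainbase SDK:

```python
from typing import List

# Networks and datasets exactly as listed in the Supported Topics table.
NETWORKS = ("ethereum", "optimism", "bsc", "polygon",
            "avalanche", "arbitrum", "base", "zksync")
DATASETS = ("blocks", "contracts", "erc20_transfer",
            "transactions", "logs", "traces")


def topic_name(network: str, dataset: str) -> str:
    """Return the Kafka topic for a network/dataset pair."""
    if network not in NETWORKS:
        raise ValueError(f"unsupported network: {network!r}")
    if dataset not in DATASETS:
        raise ValueError(f"unsupported dataset: {dataset!r}")
    return f"{network}_{dataset}"


def topics_for(network: str, datasets: List[str]) -> List[str]:
    """Topic names for several datasets on one network."""
    return [topic_name(network, d) for d in datasets]
```

For example, `topics_for("base", ["blocks", "traces"])` returns `["base_blocks", "base_traces"]`.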

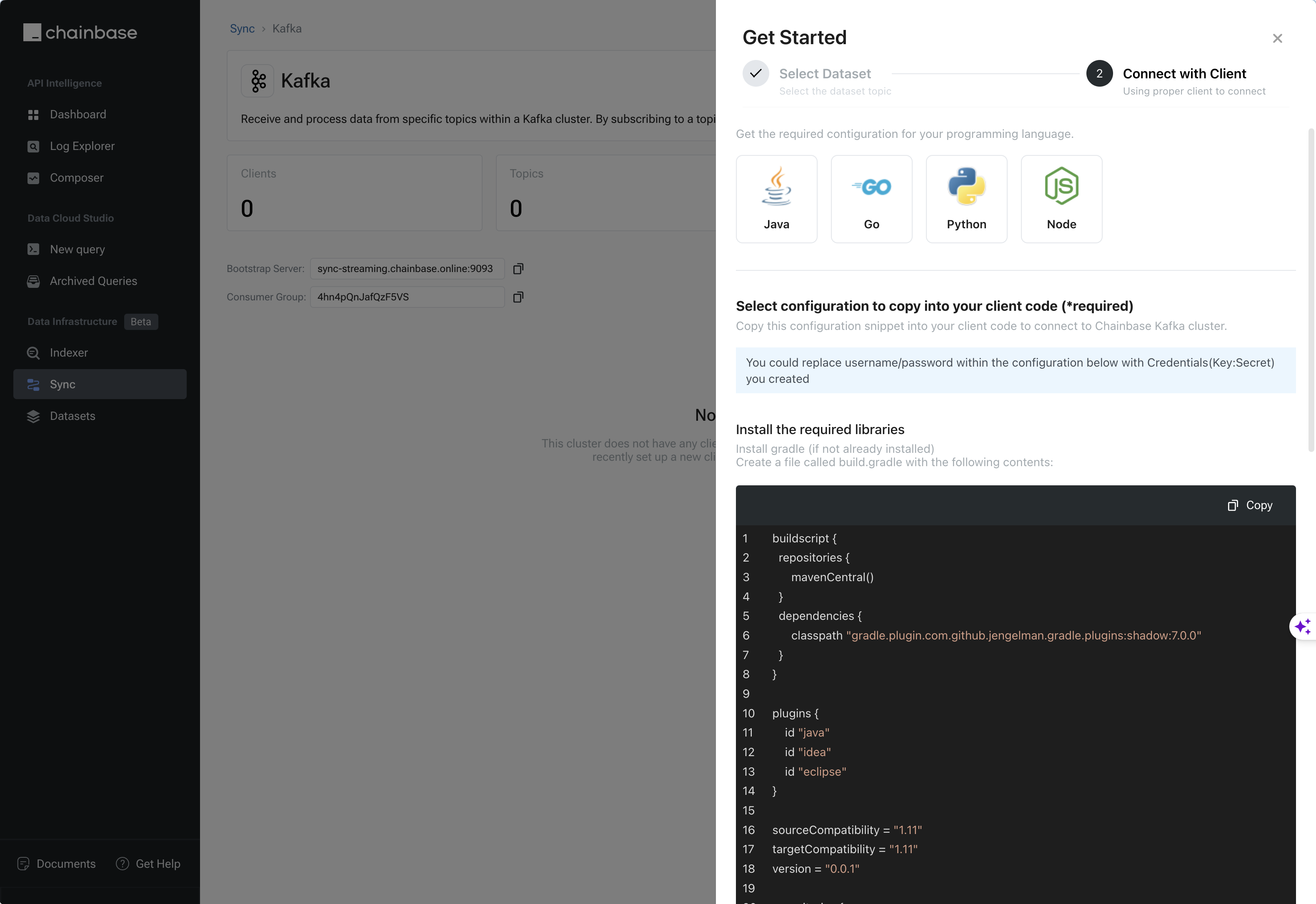

4. Connect with Credentials

Continue with the button; examples in different languages are then shown to you in step 2, Connect with Client.
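The console's per-language examples are the reference for connecting. For illustration only, here is a minimal Python consumer sketch using the third-party confluent-kafka client. The SASL_SSL/PLAIN security settings are an assumption about how the Key:Secret pair is used, and `build_config` and `consume` are hypothetical names. If the console example uses different settings, use those instead:

```python
from typing import Callable, Dict, List


def build_config(bootstrap_servers: str, group_id: str, key: str, secret: str) -> Dict[str, str]:
    """librdkafka-style consumer config built from the console values."""
    return {
        "bootstrap.servers": bootstrap_servers,
        "group.id": group_id,
        "security.protocol": "SASL_SSL",  # assumption: TLS + SASL
        "sasl.mechanisms": "PLAIN",       # assumption: key/secret as PLAIN user/password
        "sasl.username": key,
        "sasl.password": secret,
        "auto.offset.reset": "earliest",  # start point when the group has no committed offset
    }


def consume(config: Dict[str, str], topics: List[str],
            handle: Callable[[str, bytes], None],
            max_messages: int = 100, max_idle_polls: int = 30) -> int:
    """Poll up to `max_messages` records and pass each (topic, value) to `handle`.

    Stops early after `max_idle_polls` consecutive empty one-second polls.
    Requires the third-party confluent-kafka package (imported lazily).
    """
    from confluent_kafka import Consumer

    consumer = Consumer(config)
    consumer.subscribe(topics)
    seen = idle = 0
    try:
        while seen < max_messages and idle < max_idle_polls:
            msg = consumer.poll(1.0)  # wait up to one second for a record
            if msg is None:
                idle += 1
                continue
            idle = 0
            if msg.error():
                # Simplification: treat any reported error as fatal.
                raise RuntimeError(str(msg.error()))
            handle(msg.topic(), msg.value())
            seen += 1
    finally:
        # Commits offsets (when auto-commit is on) and leaves the group.
        consumer.close()
    return seen
```

For example, `consume(build_config(servers, group, key, secret), ["ethereum_blocks"], print)` would print the topic and raw value of up to 100 records. Closing the consumer in `finally` ensures it leaves the group even if the handler raises.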
